ディープラーニングの畳み込みニューラルネットワーク(CNN)の中間層の出力を可視化してみました。可視化に使用したのは、5種類の花の分類に使用したVGG16を転移学習したモデルです。VGG16は、ディープラーニングによる画像応用の代表的なモデルの一つです。

VGG16(転移学習)モデルの中間層を可視化してみる。

In [1]:

import os

import keras

from keras.preprocessing.image import ImageDataGenerator

from keras.models import Sequential, Model

from keras.layers import Input, Dense, Dropout, Activation, Flatten

from keras import optimizers

from keras.applications.vgg16 import VGG16

In [2]:

keras.__version__

Out[2]:

訓練画像、検証画像、テスト画像のディレクトリ

In [3]:

# 分類クラス

classes = ['daisy', 'dandelion','rose','sunflower','tulip']

nb_classes = len(classes)

batch_size_for_data_generator = 20

base_dir = "."

test_dir = os.path.join(base_dir, 'test_images')

test_daisy_dir = os.path.join(test_dir, 'daisy')

test_dandelion_dir = os.path.join(test_dir, 'dandelion')

test_rose_dir = os.path.join(test_dir, 'rose')

test_sunflower_dir = os.path.join(test_dir, 'sunflower')

test_tulip_dir = os.path.join(test_dir, 'tulip')

# 画像サイズ

img_rows, img_cols = 200, 200

VGG16モデル

In [4]:

input_tensor = Input(shape=(img_rows, img_cols, 3))

vgg16 = VGG16(include_top=False, weights='imagenet', input_tensor=input_tensor)

#vgg16.summary()

VGG16モデルに全結合分類器を追加する

In [5]:

top_model = Sequential()

top_model.add(Flatten(input_shape=vgg16.output_shape[1:]))

top_model.add(Dense(256, activation='relu'))

top_model.add(Dropout(0.5))

top_model.add(Dense(nb_classes, activation='softmax'))

model = Model(inputs=vgg16.input, outputs=top_model(vgg16.output))

model.summary()

In [6]:

model.compile(loss='categorical_crossentropy',optimizer=optimizers.RMSprop(lr=1e-5), metrics=['acc'])

学習結果を読み出す

In [7]:

hdf5_file = os.path.join(base_dir, 'flower-model.hdf5')

model.load_weights(hdf5_file)

In [8]:

import matplotlib.pyplot as plt

In [9]:

%matplotlib inline

実際にテスト画像を分離してみる

In [10]:

import numpy as np

from keras.preprocessing.image import load_img, img_to_array

from keras.applications.vgg16 import preprocess_input

In [11]:

filename = os.path.join(test_dir, 'sunflower')

filename = os.path.join(filename, '3681233294_4f06cd8903.jpg')

filename

Out[11]:

In [12]:

from PIL import Image

In [13]:

img = np.array( Image.open(filename))

plt.imshow( img )

Out[13]:

In [14]:

img = load_img(filename, target_size=(img_rows, img_cols))

x = img_to_array(img)

x = np.expand_dims(x, axis=0)

predict = model.predict(preprocess_input(x))

for pre in predict:

y = pre.argmax()

print("test result=",classes[y], pre)

出力する中間層を選択する

In [15]:

layer_outputs = [layer.output for layer in model.layers[2:19]]

layer_outputs

Out[15]:

中間層を出力するモデルを作成する

In [16]:

activation_model = Model(inputs=model.input, outputs=layer_outputs)

activation_model.summary()

入力画像を確認する

In [17]:

img = load_img(filename, target_size=(img_rows, img_cols))

x = img_to_array(img)

x = np.expand_dims(x, axis=0)

img_tensor = x/255.

print(img_tensor.shape)

In [18]:

plt.imshow(img_tensor[0])

plt.show()

中間層を出力するモデルの予測を実行する

In [19]:

activations = activation_model.predict(img_tensor)

In [20]:

print(len(activations))

print(activations)

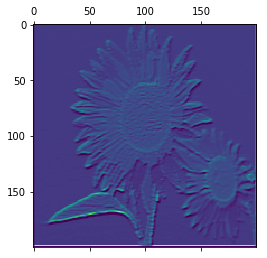

最初の畳み込み層の活性化を可視化する(一部チャンネルのみ)

In [21]:

first_layer_activation = activations[0]

print(first_layer_activation.shape)

In [22]:

plt.matshow(first_layer_activation[0, :, :, 4], cmap='viridis')

plt.show()

In [23]:

plt.matshow(first_layer_activation[0, :, :, 63], cmap='viridis')

plt.show()

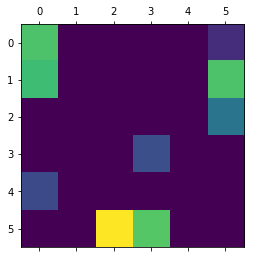

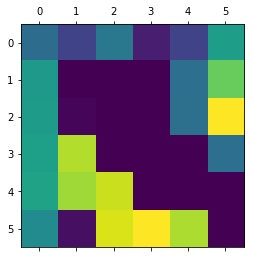

最後の畳み込み層の活性化を可視化する(一部チャンネルのみ)

In [24]:

last_layer_activation = activations[16]

print(last_layer_activation.shape)

In [25]:

plt.matshow(last_layer_activation[0, :, :, 0], cmap='viridis')

plt.show()

In [26]:

plt.matshow(last_layer_activation[0, :, :, 510], cmap='viridis')

plt.show()

<参考>

Francois Chollet 著、株式会社クイープ 翻訳、巣籠悠輔 監訳、「PythonとKerasによるディープラーニング」、マイナビ出版